Lost In Local Optima

How life-benefitting, habit-forming apps wrong users

Introduction

We use apps that attempt to manipulate us.

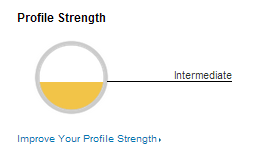

LinkedIn’s profile strength indicator is a nice example of this.¹ It leverages the endowed progress effect to get people to fill out their profiles, and it looks like this:

When you first create your profile with no information, your profile strength is represented as a non-empty circle. That is because some research² suggests that giving users a sense of a head start on a task makes them more likely to complete that task, even if the head start is artificial. In other words, if LinkedIn’s profile strength indicator started as an empty circle, we’d be less likely, all other things being equal, to add information to our profiles.

Although manipulating people is prima facie immoral, some of us work on apps that use similar techniques to manipulate people. Serious attempts have been made to articulate a moral principle that justifies work on manipulative products, and most of them come down to the following principle:

The better life principle: If my product makes my user’s life meaningfully better, then I’m justified in using product design techniques that manipulate them.

The better life principle (BLP) is probably false, and most of us who are working on manipulative products are probably wronging our users.

The gist of my argument for this is that although some products may make users’ lives better, users may be able to find better ways of improving their lives, and it is wrong to deprive users of that opportunity by subverting their rational faculties with manipulative products.

This interactive essay is a clarification and defense of this argument.

Argument, Objections, and Replies

Here’s the first premise of the argument:

(1) It’s wrong to deprive people of the opportunity to find the best paths to happiness, even if we are pushing them down a path that makes their lives better.

I think (1) is pretty intuitive. Check your intuitions on this thought experiment: Suppose I want a red car, but a car salesman manipulates me into choosing a blue one instead, for his own gain. Although my life is materially improved (I have a car now), his actions are intuitively not morally kosher, right? I think the principle underlying this intuition is basically (1).

Notes

- Nir Eyal, Hooked: How to Build Habit-Forming Products, 90. The “endowed progress effect” is also discussed in the Heath brothers’ Switch: How to Change Things When Change Is Hard.

- Joseph C. Nunes and Xavier Drèze, “The Endowed Progress Effect: How Artificial Advancement Increases Effort.”

- This is probably even more true with less professional social media apps like Instagram and Facebook.

- Technically, this response doesn’t completely dispel the objection that most products aren’t manipulative in the problematic way I’ve suggested here. It really only addresses some thinking around the particular LinkedIn example I’ve used. If someone presses me on this, we can talk more.